Teaching AI to feel – the architecture of emotional intelligence


What if your AI assistant could actually feel what kind of day you’re having?

Not in some creepy sci-fi way. But in a structured, measurable way that makes it respond like a human would—with actual understanding.

While building HeyJamie AI, I found that the missing piece in making it feel more human was emotional intelligence. I started tracking emotional signals and measuring stress patterns. This was the start of building empathy into code.

This aligns with the broader field of affective computing, pioneered by Rosalind Picard at MIT, which explores how systems can recognise, interpret and adapt to human emotions [1]. Recent research shows that modern LLMs are surprisingly good at interpreting emotional context through language alone, and in some cases they outperform humans on emotional intelligence benchmarks.

The signal is in the noise

Every interaction leaves a trace. How you type, the gaps between responses, meetings that spill over, and emails that lose length and nuance as your day accelerates. These are temporal signals—expressions of mood mapped across time.

The prototype aggregates calendar, messaging and email data to build a chronological emotional footprint. Not down to the minute, but close enough to model how emotional state evolves across a day or week.
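A minimal sketch of that aggregation step, assuming each source already yields time-sorted records. The `Signal` type, its fields and the source labels are hypothetical, not HeyJamie’s actual schema; `heapq.merge` simply interleaves the per-source streams into one chronological timeline without re-sorting everything.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(order=True)
class Signal:
    """One timestamped observation (hypothetical schema)."""
    at: datetime                        # only this field is used for ordering
    source: str = field(compare=False)  # e.g. "calendar", "email", "chat"
    kind: str = field(compare=False)    # e.g. "meeting_start", "reply_sent"


def build_footprint(*streams: list[Signal]) -> list[Signal]:
    """Merge per-source streams (each already sorted by time) into one timeline."""
    return list(heapq.merge(*streams))


if __name__ == "__main__":
    calendar = [Signal(datetime(2025, 5, 1, 9, 0), "calendar", "meeting_start")]
    email = [
        Signal(datetime(2025, 5, 1, 8, 45), "email", "reply_sent"),
        Signal(datetime(2025, 5, 1, 9, 30), "email", "reply_sent"),
    ]
    for s in build_footprint(calendar, email):
        print(s.at.strftime("%H:%M"), s.source, s.kind)
```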

Where others discard these signals as noise, I treated them as data, with the potential to build something that understands how you’re actually doing.

Three pillars of machine empathy

I boiled emotional intelligence down to three core systems—my interpretation of how to make this work in practice:

1. Indices – the numbers that matter

Think of these as your vital signs, but for cognitive and emotional state. They track indicators that correlate with stress, anxiety and executive function:

- Response latency: During non-busy periods, this measures the time between receiving an interaction and responding to it.

- Linguistic complexity: Sentence length, vocabulary diversity and punctuation patterns. These often shift across the day, particularly in relation to the user’s energy or focus. Studies suggest that more creative or complex linguistic patterns emerge during high-energy periods, while late-day fatigue correlates with simpler, less expressive language [2]. The assistant uses these patterns, especially a degradation in complexity over time, as a soft signal of reduced executive function or stress.

- Task coherence: This is measured by the context switching inferred from event titles—essentially, how semantically related sequential calendar events are. For example, a run of unrelated 1:1s is assumed to be cognitively fragmented. Detecting this is tricky and based largely on assumptions drawn from temporal adjacency and messaging patterns. If meetings are frequently pushed back by a few minutes, it can signal overrun. These are noisy but still valuable signals.

- Temporal pressure: A composite of several indicators—meeting density, deadline proximity and schedule violations—all of which contribute to a sense of time compression and reduced autonomy. These also inform other indices above. The system infers this pressure from patterns like a high frequency of tightly packed meetings, minimal breaks between engagements, and evidence of meeting overruns or reschedulings. It’s a model of perceived time scarcity, not just clock time.
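Temporal pressure is described as a composite, so here is a minimal sketch of one way to combine its parts. It assumes meetings arrive as start/end times in hours plus a hypothetical overrun field; the 10-minute break threshold and the 0.4/0.3/0.3 weights are illustrative choices, not the prototype’s values.

```python
from dataclasses import dataclass


@dataclass
class Meeting:
    start: float               # hours since midnight, e.g. 9.5 == 09:30
    end: float
    overrun_min: int = 0       # minutes past the scheduled end (hypothetical field)
    rescheduled: bool = False  # moved or bumped from its original slot


def temporal_pressure(meetings: list[Meeting], workday_hours: float = 8.0) -> float:
    """Illustrative 0-1 composite of meeting density, missing breaks and schedule violations."""
    if not meetings:
        return 0.0
    ms = sorted(meetings, key=lambda m: m.start)

    # Density: share of the workday that is booked (capped at 1).
    density = min(sum(m.end - m.start for m in ms) / workday_hours, 1.0)

    # Tightness: share of consecutive pairs with less than 10 minutes between them.
    gaps = [b.start - a.end for a, b in zip(ms, ms[1:])]
    tightness = sum(g < 10 / 60 for g in gaps) / len(gaps) if gaps else 0.0

    # Violations: share of meetings that overran or were rescheduled.
    violations = sum(m.overrun_min > 0 or m.rescheduled for m in ms) / len(ms)

    # Hypothetical weights; a real system would calibrate these per user.
    return 0.4 * density + 0.3 * tightness + 0.3 * violations


if __name__ == "__main__":
    day = [Meeting(9, 10, overrun_min=15), Meeting(10, 10.75),
           Meeting(11, 11.5), Meeting(12, 13, overrun_min=30)]
    print(f"temporal pressure: {temporal_pressure(day):.2f}")
```

A weighted sum keeps each component inspectable, which matters when the score is later explained to the user.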

Here’s a simplified snapshot of the indices collected at one point in the day. The actual data is richer, but it illustrates the main point: most values are scored on a 0 to 1 scale.

```json
{
  "indices": {
    "stress": 0.74,
    "anxiety": 0.61,
    "executive_function": 0.32,
    "cognitive_load": 0.88,
    "anger": 0.51,
    "focus": 0.36,
    "motivation": 0.42,
    "energy": 0.29,
    "clarity": 0.38,
    "patience": 0.45
  },
  "contributing_factors": {
    "meeting_overrun": 0.9,
    "task_switching_rate": 0.7,
    "linguistic_degradation": 0.6,
    "short_tone_emails": 0.55,
    "context_fragmentation": 0.68
  }
}
```

These scores come from language and scheduling behaviour—not invasive sensors. Each variable draws from observed signals:

- task_switching_rate: An approximation of context switching derived from sequences of closely scheduled meetings or tasks with semantically dissimilar titles.

- meeting_overrun: Inferred when meetings are moved or bumped later, possibly because earlier ones exceeded their scheduled time. This is a low-confidence signal.

- linguistic_degradation: Tracked as a reduction in sentence length or word count over time—especially in replies to the assistant—suggesting fatigue or frustration.

There are many more signals collected in the prototype but these illustrate the types of inputs used to score user state.
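Two of these factors can be approximated in a few lines. This is a sketch under stated assumptions: average sentence length stands in for linguistic complexity, and word-overlap (Jaccard) similarity between titles is swapped in for semantic similarity, where an embedding model would be the more realistic choice. The function names and the 0.2 threshold are hypothetical.

```python
import re


def avg_sentence_length(text: str) -> float:
    """Mean words per sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return sum(len(s.split()) for s in sentences) / len(sentences) if sentences else 0.0


def linguistic_degradation(earlier: list[str], later: list[str]) -> float:
    """Relative drop in average sentence length between two sets of replies, clamped to [0, 1]."""
    if not earlier or not later:
        return 0.0
    before = sum(map(avg_sentence_length, earlier)) / len(earlier)
    after = sum(map(avg_sentence_length, later)) / len(later)
    if before == 0:
        return 0.0
    return max(0.0, min(1.0, (before - after) / before))


def title_similarity(a: str, b: str) -> float:
    """Jaccard overlap of lower-cased title words (a stand-in for semantic similarity)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 1.0


def task_switching_rate(titles: list[str], threshold: float = 0.2) -> float:
    """Fraction of consecutive events whose titles look unrelated."""
    pairs = list(zip(titles, titles[1:]))
    if not pairs:
        return 0.0
    return sum(title_similarity(a, b) < threshold for a, b in pairs) / len(pairs)
```

For example, a morning reply averaging 7.5 words per sentence followed by an evening "Fine. Will do." (1.5 words per sentence) gives a degradation of 0.8.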

LLMs have shown strong performance in identifying emotional states through linguistic patterns alone [3][4]. A stress index of 0.74 might mean your sentences have become 40% shorter, you’ve switched tasks 12 times and your meetings are cascading into each other.

The goal is for the AI to see the pattern before you feel burnt out.

2. Contexts – understanding the story

Raw numbers are meaningless without narrative. Context is about understanding why those numbers exist.

Take this sequence from a generic workday:

```json
{
  "events": [
    { "start": "09:00", "end": "10:15", "type": "customer_demo", "overrun": 15 },
    { "start": "10:00", "end": "10:45", "type": "product_review", "overrun": -15 },
    { "start": "10:30", "end": "11:30", "type": "team_standup", "overrun": 0, "declined": true },
    { "start": "11:30", "end": "12:00", "type": "customer_call", "overrun": 0 },
    { "start": "12:00", "end": "13:00", "type": "board_prep", "overrun": 30 }
  ]
}
```

Most calendars would see five meetings. What the system detects is that these meetings have repeatedly overrun—a pattern that suggests structural overload:

User is experiencing meeting cascade failure. The customer demo overran by 15 minutes creating a domino effect. User joined the product review late, declined the standup invitation and is now in the fifth consecutive hour of conversations without an appropriate break.

Language models are increasingly being used to generate these kinds of time-series summaries and emotional interpretations from sequential data [4]. The cited study analyses psychotherapy transcripts, so it isn’t directly applicable to this example, but the underlying point holds: language models are increasingly good at interpreting and evaluating emotional context through language alone.
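The language model writes the narrative, but the facts it narrates can be extracted deterministically first. A minimal sketch over the event list above, assuming same-day `HH:MM` strings and treating every listed event, declined or not, as occupied time; the `describe_cascade` helper and the 15-minute break threshold are hypothetical.

```python
from datetime import datetime, timedelta

EVENTS = [
    {"start": "09:00", "end": "10:15", "type": "customer_demo", "overrun": 15},
    {"start": "10:00", "end": "10:45", "type": "product_review", "overrun": -15},
    {"start": "10:30", "end": "11:30", "type": "team_standup", "overrun": 0, "declined": True},
    {"start": "11:30", "end": "12:00", "type": "customer_call", "overrun": 0},
    {"start": "12:00", "end": "13:00", "type": "board_prep", "overrun": 30},
]


def _t(hhmm: str) -> datetime:
    return datetime.strptime(hhmm, "%H:%M")


def describe_cascade(events: list[dict], min_break: timedelta = timedelta(minutes=15)) -> str:
    """Extract the facts a model could narrate: overruns, declines, longest unbroken stretch."""
    if not events:
        return "no meetings"
    evs = sorted(events, key=lambda e: _t(e["start"]))
    overran = [e["type"] for e in evs if e.get("overrun", 0) > 0]
    declined = [e["type"] for e in evs if e.get("declined")]

    # Walk the day, starting a new stretch whenever a gap of at least `min_break` appears.
    run_start, run_end = _t(evs[0]["start"]), _t(evs[0]["end"])
    longest = run_end - run_start
    for e in evs[1:]:
        start, end = _t(e["start"]), _t(e["end"])
        if start - run_end >= min_break:
            run_start = start
        run_end = max(run_end, end)
        longest = max(longest, run_end - run_start)

    hours = longest.total_seconds() / 3600
    return (f"{len(evs)} meetings; overran: {', '.join(overran) or 'none'}; "
            f"declined: {', '.join(declined) or 'none'}; "
            f"longest stretch without a break: {hours:g}h")


if __name__ == "__main__":
    print(describe_cascade(EVENTS))
```

For the sample day this prints `5 meetings; overran: customer_demo, board_prep; declined: team_standup; longest stretch without a break: 4h`, a compact fact string that can be placed in the prompt for the model to turn into a summary like the one above.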

3. Levers – how the AI adapts

These context blocks become more useful when passed to language models that interact with users, because the model can draw on its understanding of the user’s day to give more relevant and empathetic responses.

Understanding is worthless without action. The system adjusts its behaviour using three levers:

```json
{
  "behavioural_state": {
    "compassion": 0.8,
    "protectiveness": 0.9,
    "initiative": 0.6
  }
}
```

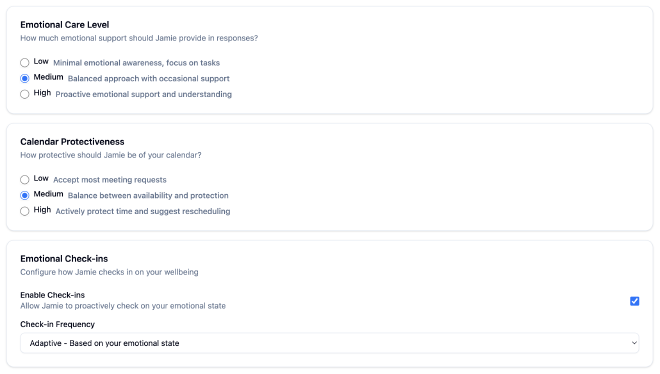

Internally for a user, this looks more controlled, like this:

The three levers affect:

- Compassion: Softer tone, more space to think

- Protectiveness: Actively shielding you from impact

- Initiative: Likelihood the assistant will intervene without prompt
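One way to derive the levers from the indices is a small set of clamped linear rules. The formulas and weights below are assumptions for illustration; the article does not say how the prototype computes them.

```python
def behavioural_state(indices: dict[str, float]) -> dict[str, float]:
    """Map 0-1 user-state indices to 0-1 assistant levers using illustrative linear rules."""

    def clamp(x: float) -> float:
        return max(0.0, min(1.0, x))

    stress = indices.get("stress", 0.0)
    anxiety = indices.get("anxiety", 0.0)
    load = indices.get("cognitive_load", 0.0)
    energy = indices.get("energy", 0.5)
    executive = indices.get("executive_function", 0.5)

    return {
        # Softer tone as stress and anxiety rise, with a small floor of warmth.
        "compassion": clamp(0.2 + 0.5 * stress + 0.5 * anxiety),
        # Shield the user more when load is high and energy is low.
        "protectiveness": clamp(0.6 * load + 0.4 * (1 - energy)),
        # Step in unprompted as executive function drops.
        "initiative": clamp(1 - executive),
    }
```

Fed the indices from the earlier snapshot, these rules land in the same high range as the example levers, though the exact values differ.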

For instance:

Normal Jamie: “You have a product sync at 2pm with the engineering team. Should I send the usual agenda?”

Stressed-context Jamie: “Heads up – product sync in 30min. I’ll handle the agenda. Take 5 minutes now to breathe.”

Same information. Totally different interaction.
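Levers only matter once they change what the model is told. A minimal sketch, assuming the levers are turned into plain-language rules appended to the system prompt; the function name, the 0.6 threshold and the rule wording are hypothetical. With the example state above (compassion 0.8, protectiveness 0.9, initiative 0.6) all three rules fire, which roughly matches the stressed-context reply.

```python
def style_instructions(state: dict[str, float], threshold: float = 0.6) -> list[str]:
    """Translate lever values into plain-language rules for the system prompt."""
    rules = []
    if state.get("compassion", 0.0) >= threshold:
        rules.append("Keep replies short and gentle; leave the user room to think.")
    if state.get("protectiveness", 0.0) >= threshold:
        rules.append("Offer to take routine work off the user's plate.")
    if state.get("initiative", 0.0) >= threshold:
        rules.append("Handle routine prep, such as agendas, without asking first.")
    return rules or ["Use the default tone and confirm before acting."]


if __name__ == "__main__":
    levers = {"compassion": 0.8, "protectiveness": 0.9, "initiative": 0.6}
    print("\n".join(style_instructions(levers)))
```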

The architecture is deliberately complex

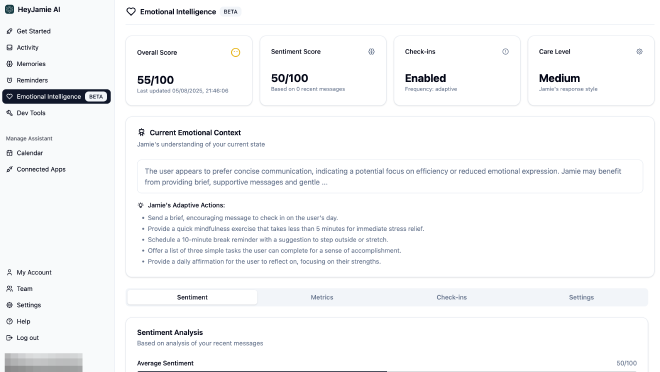

What you’re seeing here is a simplified abstraction. In reality, I already have a working prototype of this system inside HeyJamie. It’s live, actively processing my own data and providing emotional context to the AI.

The JSON examples above represent just a thin surface layer: the prototype tracks dozens of signals across behaviour, language, time and sequence. It builds personalised baselines, applies decay models and dynamically reweights interactions based on time of day, meeting type, prior context and more. Currently, it works in 6-hour increments, looking back over the last 24 hours and the last 7 days.

```json
{
  "user_profile": {
    "baseline_stress": 0.3,
    "peak_performance_window": "09:00-11:00",
    "cognitive_recovery_rate": 0.4,
    "social_interaction_cost": 0.15,
    "context_switch_penalty": 0.25
  },
  "environmental_factors": {
    "day_of_week_modifier": { "monday": 1.2, "friday": 0.8 },
    "seasonal_adjustment": 0.1,
    "timezone_shifts": 0
  }
}
```
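The baselines, decay and 6-hour windows described above can be sketched as follows. Everything here is an assumption about how such a profile might be applied: an exponential half-life for decay, simple per-bucket means, and a day-of-week modifier that scales the user’s expected stress. `PROFILE` mirrors a subset of the JSON above; the function names are hypothetical.

```python
import math
from datetime import datetime, timedelta

# Subset of the profile JSON above; the keys are the article's, the usage is assumed.
PROFILE = {
    "user_profile": {"baseline_stress": 0.3},
    "environmental_factors": {"day_of_week_modifier": {"monday": 1.2, "friday": 0.8}},
}
DAYS = ["monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"]
BUCKET = timedelta(hours=6)
Sample = tuple[datetime, float]  # (timestamp, index value)


def decayed_mean(samples: list[Sample], now: datetime, half_life: timedelta) -> float:
    """Exponentially decayed average: a sample one half-life old counts half as much."""
    num = den = 0.0
    for at, value in samples:
        weight = 0.5 ** ((now - at) / half_life)  # timedelta / timedelta gives a float
        num += weight * value
        den += weight
    return num / den if den else 0.0


def six_hour_buckets(samples: list[Sample], now: datetime, window: timedelta) -> list[float]:
    """Mean value per 6-hour bucket over `window`, oldest bucket first; NaN where empty."""
    n = int(window / BUCKET)  # 4 buckets for 24 hours, 28 for 7 days
    sums, counts = [0.0] * n, [0] * n
    for at, value in samples:
        age = now - at
        if timedelta(0) <= age < n * BUCKET:
            i = n - 1 - int(age / BUCKET)
            sums[i] += value
            counts[i] += 1
    return [s / c if c else math.nan for s, c in zip(sums, counts)]


def adjusted_stress(raw: float, at: datetime, profile: dict = PROFILE) -> float:
    """Stress above this user's expected level for that weekday, rescaled to 0-1."""
    baseline = profile["user_profile"]["baseline_stress"]
    modifiers = profile["environmental_factors"]["day_of_week_modifier"]
    # Assumption: the modifier scales the expected level (Mondays run hotter for this user).
    expected = min(baseline * modifiers.get(DAYS[at.weekday()], 1.0), 0.99)
    return max(0.0, (raw - expected) / (1 - expected))
```

Treating the Monday modifier as a higher expected level means the same reading looks less anomalous on a Monday; the opposite interpretation (amplifying Monday readings) is equally plausible, and the profile alone does not say which applies.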

LLMs have proven capable of not just recognising emotions but also suggesting helpful, timely interventions [5]. They’ve even outperformed human physicians in patient-facing empathy in controlled studies.

It’s deeply weird, but somehow it feels deeply human. You’re taught that computers should be logical and neutral. But maybe that’s the wrong default.

When an AI notices I’m overwhelmed and quietly handles things, it feels less like software and more like a really good assistant who just gets it.

Trust isn’t built on perfection. It’s built on appropriate response.

The uncomfortable truth

This works too well. When your AI notices your stress before your partner does, it’s either the future of human-computer interaction, a mirror held up to how poorly we support each other, or another Black Mirror episode.

Maybe all three.

A note on privacy and ethics

Let’s be clear: emotional AI at scale is a privacy minefield. Systems that apply this publicly, such as Amazon’s emotion-detecting Rekognition trials or facial analysis in UK train stations, have rightly drawn fire [6].

But there’s a crucial distinction here. Jamie operates on a fundamentally different privacy model:

Your data stays yours. Unlike public surveillance systems or corporate emotion tracking, Jamie processes everything locally. The raw conversations, calendar entries and email content never leave your control. What gets collected for system improvement is only aggregated, anonymised summary data—stress indices, response patterns, behavioural trends—stripped of any identifying information or actual content.
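A minimal sketch of that reduction step, assuming local readings are dicts that may also hold identifiers and raw content. Only numeric index values survive, averaged and rounded to one decimal place; the function name and record layout are hypothetical. This shows the shape of the summary data only; formal guarantees such as minimum group sizes or differential privacy would be layered on top.

```python
from statistics import mean


def summarise_for_upload(readings: list[dict]) -> dict:
    """Reduce local readings to coarse aggregates before anything leaves the device.

    User IDs, message text, event titles and timestamps are never copied;
    only numeric index values are averaged and rounded.
    """
    per_index: dict[str, list[float]] = {}
    for r in readings:
        for name, value in r.get("indices", {}).items():
            if isinstance(value, (int, float)):
                per_index.setdefault(name, []).append(value)
    return {
        "n_readings": len(readings),
        "indices_mean": {k: round(mean(v), 1) for k, v in sorted(per_index.items())},
    }
```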

Transparency by design. You can see exactly what emotional indices are being tracked. No black box algorithms making decisions about your emotional state without your understanding.

Consent isn’t just a checkbox. The system requires explicit opt-in for emotional tracking features. You can disable them entirely, adjust sensitivity levels or exclude specific data sources. Your assistant works fine without them—they just make it work better.
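Those controls map naturally onto a small settings object that gates every signal. A sketch with hypothetical names: tracking is off by default (opt-in), excluded sources are filtered out before scoring, and sensitivity raises or lowers the stress level at which the assistant starts adapting.

```python
from dataclasses import dataclass, field


@dataclass
class EmotionalTrackingSettings:
    enabled: bool = False     # explicit opt-in: nothing is tracked until the user enables it
    sensitivity: float = 0.5  # 0 = most conservative, 1 = most responsive
    excluded_sources: set[str] = field(default_factory=set)  # e.g. {"email"}


def usable_signals(signals: list[dict], settings: EmotionalTrackingSettings) -> list[dict]:
    """Return only the signals the user has consented to share with the scoring step."""
    if not settings.enabled:
        return []
    return [s for s in signals if s["source"] not in settings.excluded_sources]


def should_adapt(stress: float, settings: EmotionalTrackingSettings) -> bool:
    """Higher sensitivity lowers the stress level at which the assistant starts adapting."""
    threshold = 1.0 - 0.5 * settings.sensitivity  # sensitivity 0.5 -> threshold 0.75
    return settings.enabled and stress >= threshold
```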

Summary data protection. Summary data is used solely for understanding usage patterns and improving the emotional responses of the language models. No individual user’s emotional patterns can be reconstructed from it.

The uncomfortable reality is that truly empathetic AI requires understanding emotional context. The question isn’t whether to build these systems—it’s how to build them responsibly. By keeping raw data under user control and limiting collection to anonymous summaries, we can develop AI that genuinely helps without compromising privacy.

This isn’t surveillance. It’s support, built on a foundation of user control and transparency.

If you want to experience emotionally aware AI yourself, request early access at heyjamie.ai. I’m rolling it out slowly and deliberately.

Additional Reading

Somers, M. (2019). Emotion AI, explained. MIT Sloan.

Abrosymova, K. (2019). How Artificial Intelligence and Journaling Make Us More Aware of Our Emotions. The Writing Cooperative.

References

[1] Picard, R. W. (1997). Affective Computing. MIT Press.

[2] Tandoc, M. C., Bayda, M., Poskanzer, C., Cho, E., Cox, R., Stickgold, R., & Schapiro, A. C. (2021). Examining the effects of time of day and sleep on generalization. PLOS ONE.

[3] Schlegel, K. et al. (2025). Large language models are proficient in solving and creating emotional intelligence tests. Communications Psychology.

[4] Lalk, C. et al. (2025). Employing large language models for emotion detection in psychotherapy transcripts. Frontiers in Psychiatry.

[5] JMIR Editorial Board. (2024). Large Language Models and Empathy: Systematic Review. Journal of Medical Internet Research.

[6] For more on privacy concerns with emotion AI surveillance systems, see various reports on Amazon’s Rekognition and UK facial analysis trials.